Monday, 26 February 2024, Bengaluru, India

As its latest announcement shows, Paris-based AI startup Mistral AI is gradually building an alternative to OpenAI and Anthropic. The company is launching Mistral Large, a new flagship large language model whose reasoning capabilities are designed to rival those of other top-tier models such as GPT-4 and Claude 2.

(Image Source: Techcrunch.com)

Alongside Mistral Large, the company is launching Le Chat, its own alternative to ChatGPT. This chat assistant is currently available in beta.

If you are unfamiliar with Mistral AI, the company is better known for its cap table: it raised a very large amount of money in very little time to develop foundational AI models. Mistral AI was officially incorporated in May 2023, and just a few weeks later it raised a $113 million seed round. In December, the company closed a $415 million funding round led by Andreessen Horowitz (a16z).

Founded by alumni of Google’s DeepMind and Meta, Mistral AI initially positioned itself as an open-source AI startup. Its first model was released publicly with access to the model weights, but its larger models are distributed under a different, more restrictive license.

Because Mistral AI offers Mistral Large through a paid API with usage-based pricing, the company’s business model is starting to look more like OpenAI’s. Querying Mistral Large costs $8 per million input tokens and $24 per million output tokens. In artificial intelligence jargon, tokens are small chunks of words. For instance, an AI model processing the word “TechCrunch” might split it into the two tokens “Tech” and “Crunch.”

By default, Mistral Large supports a context window of 32k tokens, which generally corresponds to more than 20,000 words of English text. In addition to English, Mistral Large supports French, German, Italian, and Spanish.

For comparison, GPT-4 with a 32k-token context window currently costs $60 per million input tokens and $120 per million output tokens. That makes Mistral Large 5 to 7.5 times cheaper than GPT-4-32k: 7.5 times cheaper for input ($60 versus $8) and 5 times cheaper for output ($120 versus $24). Things change quickly, however, and AI companies adjust their pricing regularly.
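
To make the price comparison concrete, here is a minimal Python sketch that computes the cost of a workload at the per-million-token list prices quoted above. The workload size (2 million input tokens and 500,000 output tokens) and the `request_cost` helper are hypothetical, chosen only for illustration, and the prices are the February 2024 figures cited in this article, which may since have changed.

```python
# Per-million-token list prices (USD) as quoted in this article (February 2024).
# Assumption: these figures are only valid as of publication; providers change them often.
PRICES = {
    "Mistral Large": {"input": 8.0, "output": 24.0},
    "GPT-4-32k": {"input": 60.0, "output": 120.0},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Hypothetical helper: USD cost of a workload under per-million-token pricing."""
    price = PRICES[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


# Hypothetical workload: 2M input tokens and 500k output tokens.
mistral = request_cost("Mistral Large", 2_000_000, 500_000)
gpt4 = request_cost("GPT-4-32k", 2_000_000, 500_000)

print(f"Mistral Large: ${mistral:.2f}")  # prints Mistral Large: $28.00
print(f"GPT-4-32k: ${gpt4:.2f}")         # prints GPT-4-32k: $180.00
print(f"Ratio: {gpt4 / mistral:.2f}x")   # prints Ratio: 6.43x

# The per-direction price ratios bound the overall ratio for any input/output mix.
print(PRICES["GPT-4-32k"]["input"] / PRICES["Mistral Large"]["input"])    # prints 7.5
print(PRICES["GPT-4-32k"]["output"] / PRICES["Mistral Large"]["output"])  # prints 5.0
```

Because the overall cost ratio is a weighted blend of the input ratio (7.5) and the output ratio (5), any mix of input and output tokens lands somewhere between 5x and 7.5x, which is where the range above comes from.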

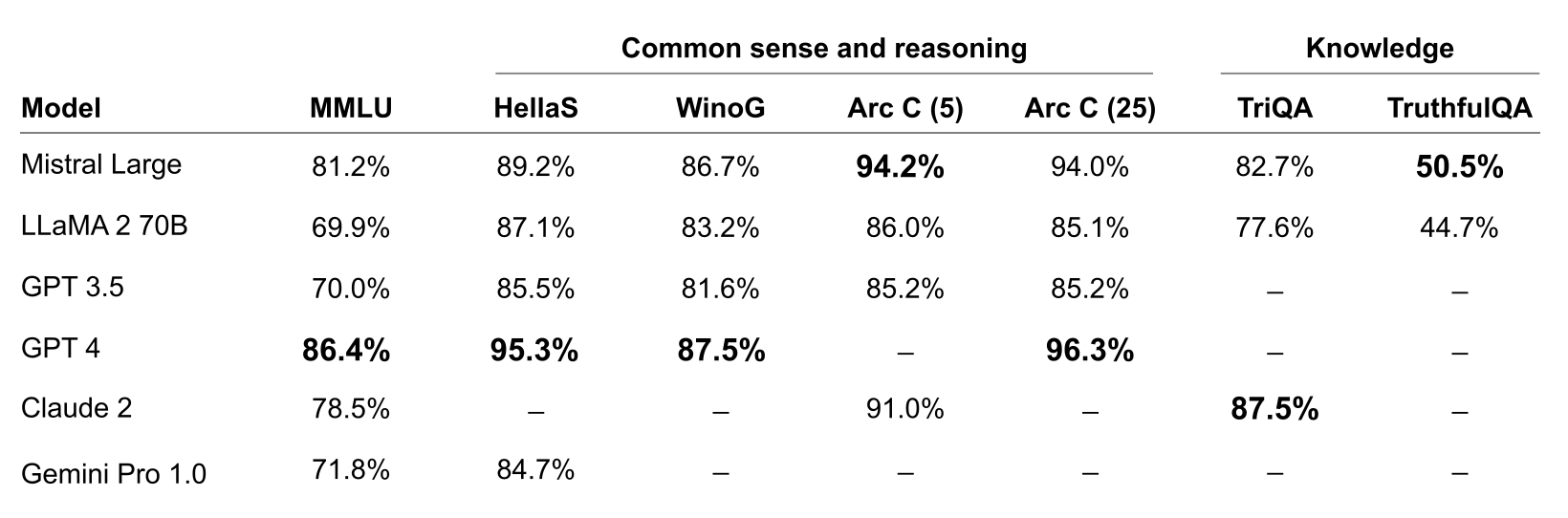

But how does Mistral Large stack up against GPT-4 and Claude 2? As always, it’s hard to tell. Mistral AI claims that, across several benchmarks, it ranks second only to GPT-4. However, the benchmarks may have been cherry-picked, and real-life usage could tell a different story. We’ll have to dig deeper to see how it performs in our own tests.

Mistral AI is also launching its chat assistant, Le Chat, today. Anyone can sign up and try it at chat.mistral.ai. The company says the service is in beta for now and that there might be “quirks.”

Users can choose among three models: Mistral Small, Mistral Large, and Mistral Next, a prototype model designed to give brief and concise answers. Access to the service is free for now. It is also worth noting that Le Chat cannot access the web.

The company also plans to launch a paid version of Le Chat for enterprise clients. In addition to central billing, enterprise clients will be able to define their own moderation mechanisms.

Finally, Mistral AI is using today’s news dump to announce a partnership with Microsoft. In addition to Mistral’s own API platform, Microsoft will offer Mistral models to its Azure customers.

On its own, this is just one more model in Azure’s model catalog, which doesn’t seem like a big deal. However, it also signals that Microsoft and Mistral AI are now discussing collaboration opportunities, and potentially more. The first benefit of the partnership is that Mistral AI should be able to attract more customers through this new distribution channel.

Microsoft is the main investor in OpenAI’s capped-profit subsidiary. However, it has also welcomed other AI models onto its cloud computing platform. For example, Microsoft and Meta have partnered to offer Llama large language models on Azure.

This open partnership strategy helps Microsoft keep Azure customers within its product ecosystem. It may also help the company with antitrust scrutiny.

(Information Source: Techcrunch.com)

Hi, I am Subhranil Ghosh. I enjoy expressing my feelings and thoughts through writing, particularly on trending topics and startup-related articles. My passion for these subjects allows me to connect with others and share valuable insights.