Introduction:

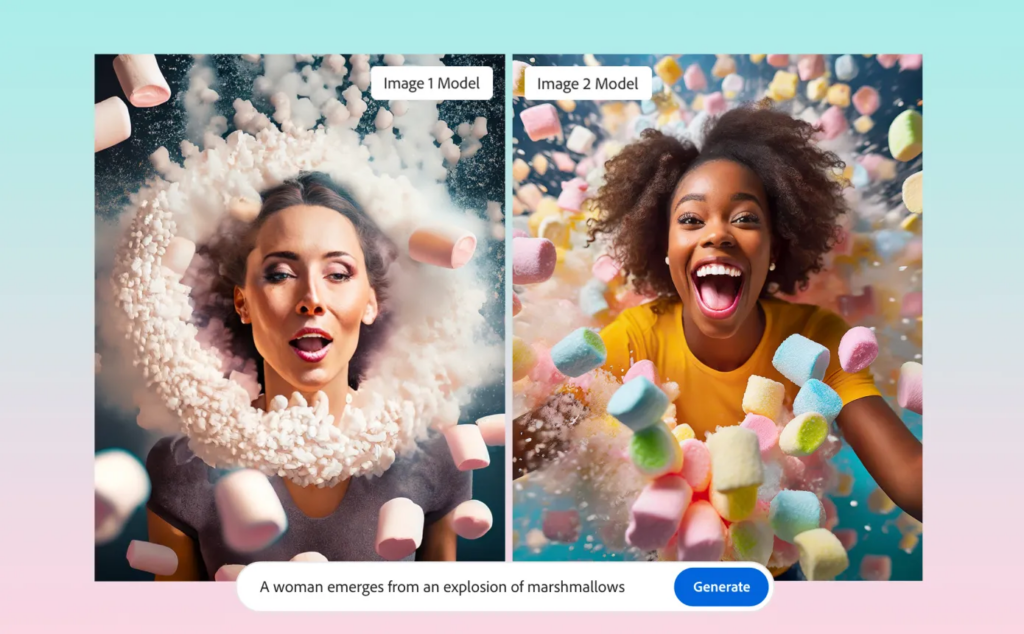

Adobe today said that it has updated the models that power Firefly, its generative AI image creation service, during MAX, its annual conference for creatives. The Firefly Image 2 Model, as it’s formally known, will, according to Adobe, render humans more accurately, including their facial features, skin, bodies, and hands (which have long plagued comparable models).

Adobe also said today that Firefly users have created three billion images since the service launched roughly six months ago, one billion of them in the last month alone. In addition, 90% of Firefly users are new to Adobe’s products. Most of these users undoubtedly use the Firefly web app, which helps to explain why the company decided to turn what had been a Firefly demo site into a full-fledged Creative Cloud service a few weeks ago.

The new model was not only trained using more recent photos from Adobe Stock and other commercially safe sources, but it is also substantially larger, according to Alexandru Costin, Adobe’s VP for generative AI and Sensei. “Firefly is an ensemble of multiple models, and I think we’ve increased their sizes by a factor of three,” he told me. “So it’s like a brain that’s three times larger and will know how to make these connections and render more beautiful pixels, more beautiful details for the user.”

Additionally, the company nearly doubled the dataset, which should help the model better comprehend what users are asking for.

Although the bigger model certainly requires more resources, Costin said that it should run at a similar speed to the smaller one. Adobe, he said, continues to research and invest in distillation, pruning, optimization, and quantization. Ensuring that customers get a similar experience takes a lot of work, he noted, and the company also tries to keep its cloud costs as low as possible. At the moment, however, Adobe is more concerned with quality than optimization.

The new model will initially be accessible through the Firefly web app, but it will soon come to Creative Cloud products like Photoshop, where it will power popular features like generative fill. Costin emphasized this point as well. According to him, Adobe views generative AI more as a tool for generative editing than for content creation.

Adobe Firefly Can Now Generate More Realistic Images:

Adobe Firefly Can Now Generate More Realistic Images [Source of Image: Techcrunch.com]

Costin said that customers have shown a strong interest in Photoshop’s generative fill feature, primarily because it lets them enhance existing assets such as photos or product shots and improve their overall workflows. Because Adobe is prioritizing this use case, he said, the company uses “generative editing” as its umbrella term for generative capabilities that go beyond text-to-image.

In addition to the updated model, Adobe is introducing several new controls in the Firefly web app. These let users fine-tune their images by adjusting the field of view, motion blur, and depth of field. Other additions include a new auto-complete function for writing prompts (which Adobe claims is optimized to help you get to a better image) and the ability to upload an existing image and have Firefly replicate its style.

Sai Sandhya is known for her expertise in Trending News and Startup News. She is passionate about bringing Startup Stories to life. Her proficiency in curating Listings and analyzing Case Studies adds exceptional value to the ever-evolving Business landscape.